GridFS is a specification for storing and retrieving files that exceed the BSON document size limit of 16 MB.

Instead of storing a file in a single document, GridFS divides a file into parts, or chunks [1], and stores each chunk as a separate document. By default, GridFS uses a chunk size of 255 KB; that is, GridFS divides a file into chunks of 255 KB with the exception of the last chunk. The last chunk is only as large as necessary. Similarly, files that are no larger than the chunk size only have a final chunk, using only as much space as needed plus some additional metadata.
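The chunking arithmetic described above can be sketched in a few lines. This is only an illustration: `chunkLayout` and `DEFAULT_CHUNK_SIZE` are hypothetical names, not part of any driver API, and the sketch assumes that a zero-length file has no chunks.

```javascript
// Default GridFS chunk size: 255 KB expressed in bytes (261120).
const DEFAULT_CHUNK_SIZE = 255 * 1024;

// Hypothetical helper (not a driver function): describes how a file of
// `length` bytes would be divided into chunks of `chunkSize` bytes.
function chunkLayout(length, chunkSize = DEFAULT_CHUNK_SIZE) {
  if (!Number.isInteger(length) || length < 0) {
    throw new RangeError("length must be a non-negative integer");
  }
  const chunkCount = Math.ceil(length / chunkSize);
  // Every chunk except the last is full; the last holds the remainder.
  // Assumption: an empty file has zero chunks.
  const lastChunkSize =
    chunkCount === 0 ? 0 : length - (chunkCount - 1) * chunkSize;
  return { chunkCount, lastChunkSize };
}

console.log(chunkLayout(1000000)); // 4 chunks, the last one 216640 bytes
console.log(chunkLayout(100));     // 1 chunk of 100 bytes
```

A file no larger than the chunk size produces a single chunk that is exactly as large as the file.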

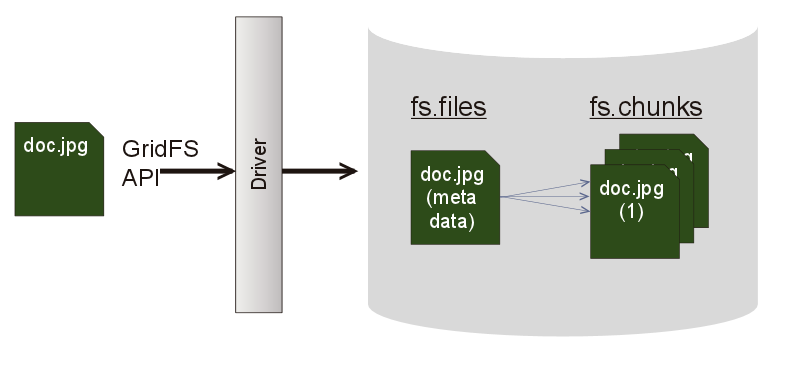

GridFS uses two collections to store files. One collection stores the file chunks, and the other stores file metadata. For more information, refer to GridFS Collections.

When you query a GridFS store for a file, the driver or client will reassemble the chunks as needed. You can perform range queries on files stored through GridFS. You also can access information from arbitrary sections of files, which allows you to “skip” into the middle of a video or audio file.
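To make the idea of skipping into the middle of a file concrete, the following sketch maps a byte range to the chunk sequence numbers that cover it. `chunksForRange` is a hypothetical helper, and the sketch assumes every chunk except the last has exactly `chunkSize` bytes.

```javascript
// Hypothetical helper: which chunk sequence numbers (the `n` field) cover
// the byte range [start, end) of a file with fixed-size chunks.
function chunksForRange(start, end, chunkSize = 255 * 1024) {
  if (start < 0 || end <= start) {
    throw new RangeError("expected 0 <= start < end");
  }
  const firstN = Math.floor(start / chunkSize);
  const lastN = Math.floor((end - 1) / chunkSize);
  return {
    firstN,
    lastN,
    // Bytes to skip inside the first chunk before the range begins.
    offsetInFirst: start - firstN * chunkSize,
  };
}

// Reading 100000 bytes starting at byte 600000 touches only chunk 2.
console.log(chunksForRange(600000, 700000));
```

A reader could then fetch only the chunks whose `n` falls between `firstN` and `lastN`, rather than loading the whole file.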

GridFS is useful not only for storing files that exceed 16 MB but also for storing any files for which you want access without having to load the entire file into memory.

When should I use GridFS?

For documents in a MongoDB collection, you should always use GridFS for storing files larger than 16 MB.

In some situations, storing large files may be more efficient in a MongoDB database than on a system-level filesystem.

- If your filesystem limits the number of files in a directory, you can use GridFS to store as many files as needed.

- When you want to keep your files and metadata automatically synced and deployed across a number of systems and facilities, you can use GridFS. When using geographically distributed replica sets, MongoDB can distribute files and their metadata automatically to a number of mongod instances and facilities.

- When you want to access information from portions of large files, you can use GridFS to recall sections of files without reading the entire file into memory.

Do not use GridFS if you need to update the content of the entire file atomically. As an alternative, you can store multiple versions of each file and specify the current version of the file in the metadata. After uploading the new version of the file, you can update the metadata field that indicates “latest” status in an atomic update, and later remove previous versions if needed.

Furthermore, if your files are all smaller than the 16 MB BSON Document Size limit, consider storing each file manually within a single document. You may use the BinData data type to store the binary data.

In a sharded cluster, leaving the files collection unsharded allows all the file metadata documents to live on the primary shard.

Use GridFS

To store and retrieve files using GridFS, use either of the following:

- A MongoDB driver. See the drivers documentation for information on using GridFS with your driver.

- The mongofiles command-line tool. See the mongofiles reference for documentation.

GridFS Collections

GridFS stores files in two collections:

- chunks stores the binary chunks.

- files stores the file’s metadata.

GridFS places the collections in a common bucket by prefixing each with the bucket name. By default, GridFS uses two collections with a bucket named fs:

- fs.files

- fs.chunks

You can choose a different bucket name, as well as create multiple buckets in a single database. The full collection name, which includes the bucket name, is subject to the namespace length limit.
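The naming rule is simple enough to express directly. The helper below is hypothetical and only illustrates how the bucket name prefixes both collection names; it does not check the namespace length limit.

```javascript
// Hypothetical helper: derive the two collection names for a bucket.
function bucketCollections(bucketName = "fs") {
  return {
    files: `${bucketName}.files`,
    chunks: `${bucketName}.chunks`,
  };
}

console.log(bucketCollections());         // the default bucket: fs.files and fs.chunks
console.log(bucketCollections("photos")); // a second bucket in the same database
```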

The chunks Collection

Each document in the chunks[1] collection represents a distinct chunk of a file as represented in GridFS. Documents in this collection have the following form:

    {
      "_id" : <ObjectId>,
      "files_id" : <ObjectId>,
      "n" : <num>,
      "data" : <binary>
    }

A document from the chunks collection contains the following fields:

- chunks._id: The unique ObjectId of the chunk.

- chunks.files_id: The _id of the “parent” document, as specified in the files collection.

- chunks.n: The sequence number of the chunk. GridFS numbers all chunks, starting with 0.

- chunks.data: The chunk’s payload as a BSON Binary type.
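As a rough illustration of how these fields fit together, the sketch below splits a byte array into chunk-shaped objects and reassembles them by ordering on `n`. Both helpers are hypothetical and work purely in memory: a real driver stores `data` as BSON Binary and assigns `_id` values, neither of which is modeled here.

```javascript
// Hypothetical helper: split `data` (a Uint8Array) into objects shaped like
// documents in the chunks collection. `_id` is omitted in this sketch.
function toChunkDocs(filesId, data, chunkSize) {
  const docs = [];
  for (let offset = 0, n = 0; offset < data.length; offset += chunkSize, n++) {
    docs.push({
      files_id: filesId,
      n, // sequence number, starting at 0
      data: data.subarray(offset, offset + chunkSize),
    });
  }
  return docs;
}

// Hypothetical helper: rebuild the original bytes by ordering chunks on `n`.
function reassemble(chunkDocs) {
  const ordered = [...chunkDocs].sort((a, b) => a.n - b.n);
  const total = ordered.reduce((sum, c) => sum + c.data.length, 0);
  const out = new Uint8Array(total);
  let offset = 0;
  for (const c of ordered) {
    out.set(c.data, offset);
    offset += c.data.length;
  }
  return out;
}

// A tiny chunk size keeps the example readable.
const original = Uint8Array.from({ length: 10 }, (_, i) => i);
const docs = toChunkDocs("file-1", original, 4);
console.log(docs.map((d) => d.data.length));       // chunk sizes: 4, 4, 2
console.log(reassemble(docs.reverse()).join(",")); // 0,1,2,3,4,5,6,7,8,9
```

Because each chunk carries its own sequence number, the chunks can arrive in any order and still be reassembled correctly.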

The files Collection

Each document in the files collection represents a file in GridFS. Consider a document in the files collection, which has the following form:

    {
      "_id" : <ObjectId>,
      "length" : <num>,
      "chunkSize" : <num>,
      "uploadDate" : <timestamp>,
      "md5" : <hash>,
      "filename" : <string>,
      "contentType" : <string>,
      "aliases" : <string array>,
      "metadata" : <dataObject>
    }

Documents in the files collection contain some or all of the following fields:

- files._id: The unique identifier for this document. The _id is of the data type you chose for the original document. The default type for MongoDB documents is BSON ObjectId.

- files.length: The size of the document in bytes.

- files.chunkSize: The size of each chunk in bytes. GridFS divides the document into chunks of size chunkSize, except for the last, which is only as large as needed. The default size is 255 kilobytes (kB). Changed in version 2.4.10: The default chunk size changed from 256 kB to 255 kB.

- files.uploadDate: The date the document was first stored by GridFS. This value has the Date type.

- files.md5: An MD5 hash of the complete file returned by the filemd5 command. This value has the String type.

- files.filename: Optional. A human-readable name for the GridFS file.

- files.contentType: Optional. A valid MIME type for the GridFS file.

- files.aliases: Optional. An array of alias strings.

- files.metadata: Optional. Any additional information you want to store.

Applications may create additional arbitrary fields.
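The length and chunkSize fields determine what the matching chunks should look like, which makes a simple consistency check possible. The function below is a hypothetical in-memory sketch, not a driver feature; it assumes each chunk's `data` exposes a `length` in bytes.

```javascript
// Hypothetical check: do the chunk objects match the `length` and
// `chunkSize` recorded in a files document?
function isConsistent(fileDoc, chunkDocs) {
  const expectedCount = Math.ceil(fileDoc.length / fileDoc.chunkSize);
  if (chunkDocs.length !== expectedCount) return false;

  const ordered = [...chunkDocs].sort((a, b) => a.n - b.n);
  let total = 0;
  for (let i = 0; i < ordered.length; i++) {
    // Sequence numbers must run 0, 1, 2, ... with no gaps or duplicates.
    if (ordered[i].n !== i) return false;
    const size = ordered[i].data.length;
    const isLast = i === ordered.length - 1;
    // Only the last chunk may be shorter than chunkSize.
    if (!isLast && size !== fileDoc.chunkSize) return false;
    total += size;
  }
  return total === fileDoc.length;
}

const fileDoc = { _id: "file-1", length: 10, chunkSize: 4, filename: "example.bin" };
const chunkDocs = [
  { files_id: "file-1", n: 0, data: new Uint8Array(4) },
  { files_id: "file-1", n: 1, data: new Uint8Array(4) },
  { files_id: "file-1", n: 2, data: new Uint8Array(2) },
];
console.log(isConsistent(fileDoc, chunkDocs));             // true
console.log(isConsistent(fileDoc, chunkDocs.slice(0, 2))); // false: a chunk is missing
```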

GridFS Indexes

GridFS uses indexes on each of the chunks and files collections for efficiency. Drivers that conform to the GridFS specification automatically create these indexes for convenience. You can also create any additional indexes as desired to suit your application’s needs.

The chunks Index

GridFS uses a unique, compound index on the chunks collection using the files_id and n fields. This allows for efficient retrieval of chunks, as demonstrated in the following example:

db.fs.chunks.find( { files_id: myFileID } ).sort( { n: 1 } )

Drivers that conform to the GridFS specification will automatically ensure that this index exists before read and write operations. See the relevant driver documentation for the specific behavior of your GridFS application.

If this index does not exist, you can issue the following operation to create it using the mongo shell:

db.fs.chunks.createIndex( { files_id: 1, n: 1 }, { unique: true } );

The files Index

GridFS uses an index on the files collection using the filename and uploadDate fields. This index allows for efficient retrieval of files, as shown in this example:

db.fs.files.find( { filename: myFileName } ).sort( { uploadDate: 1 } )

Drivers that conform to the GridFS specification will automatically ensure that this index exists before read and write operations. See the relevant driver documentation for the specific behavior of your GridFS application.

If this index does not exist, you can issue the following operation to create it using the mongo shell:

db.fs.files.createIndex( { filename: 1, uploadDate: 1 } );
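To show what the filename and uploadDate ordering provides, here is an in-memory equivalent of the query above. `revisionsOf` is a hypothetical helper operating on plain objects; it does not talk to a database and says nothing about how a driver uses the index.

```javascript
// Hypothetical in-memory equivalent of filtering on `filename` and sorting
// on `uploadDate`: returns matching files documents, oldest first.
function revisionsOf(filesDocs, filename) {
  return filesDocs
    .filter((doc) => doc.filename === filename)
    .sort((a, b) => a.uploadDate - b.uploadDate);
}

const exampleFiles = [
  { _id: 2, filename: "report.pdf", uploadDate: new Date("2024-03-01") },
  { _id: 1, filename: "report.pdf", uploadDate: new Date("2024-01-15") },
  { _id: 3, filename: "notes.txt", uploadDate: new Date("2024-02-10") },
];

const revisions = revisionsOf(exampleFiles, "report.pdf");
console.log(revisions.map((doc) => doc._id));     // oldest first: 1, then 2
console.log(revisions[revisions.length - 1]._id); // 2, the most recent upload
```

Several files can share one filename, so ordering by uploadDate is what distinguishes an older upload from a newer one.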

[1] The use of the term chunks in the context of GridFS is not related to the use of the term chunks in the context of sharding.

Sharding GridFS

There are two collections to consider with GridFS: files and chunks.

If you need to shard a GridFS data store, shard the chunks collection, using either { files_id : 1, n : 1 } or { files_id : 1 } as the shard key index.

files_id is an ObjectId and changes monotonically.

You cannot use Hashed Sharding when sharding the chunks collection.

The files collection is small and only contains metadata. None of the required keys for GridFS lend themselves to an even distribution in a sharded environment. If you must shard the files collection, use the _id field, possibly in combination with an application field.

GridFS Index

GridFS uses a unique, compound index on the chunks collection for the files_id and n fields. The files_id field contains the _id of the chunk’s “parent” document. The n field contains the sequence number of the chunk. GridFS numbers all chunks, starting with 0. For descriptions of the documents and fields in the chunks collection, see The chunks Collection.

The GridFS index allows efficient retrieval of chunks using the files_id and n values, as shown in the following example:

cursor = db.fs.chunks.find({files_id: myFileID}).sort({n:1});

See the relevant driver documentation for the specific behavior of your GridFS application. If your driver does not create this index, issue the following operation using the mongo shell:

db.fs.chunks.createIndex( { files_id: 1, n: 1 }, { unique: true } );